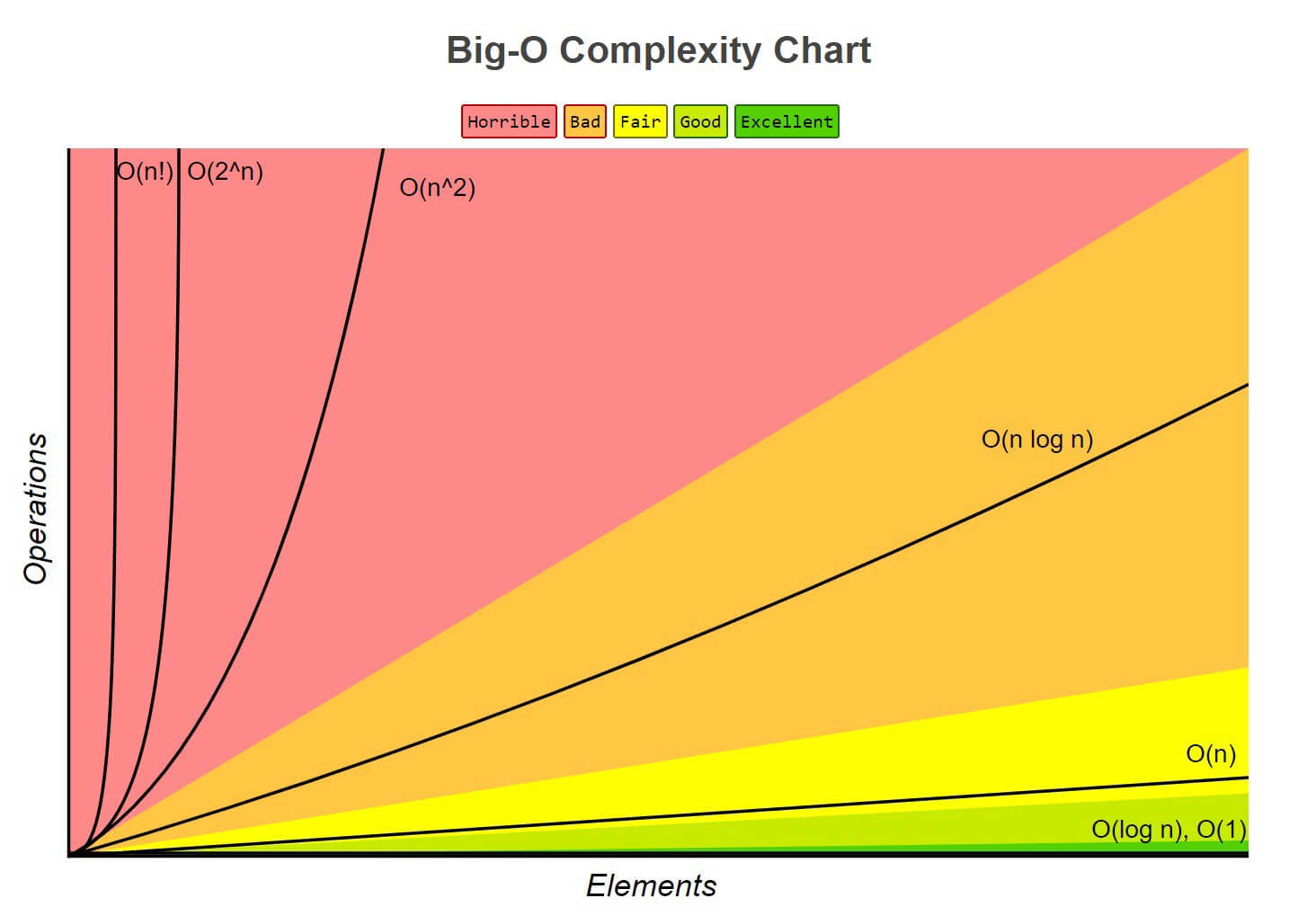

In many cases the “O” of an algorithm will fall into one of the following cases:

O(1)– Constant complexity. Time to complete is the same regardless of the size of input set. An example is accessing an array element by index.1 item: 1 second10 items: 1 second100 items: 1 secondO(log n)– Logarithmic complexity. Time to complete increases roughly in line with the log (n). For example 1024 items takes roughly twice as long as 32 items, because Log2(1024) = 10 and Log2(32) = 5. An example is finding an item in a binary search tree (BST).1 item: 1 second10 items: 2 seconds100 items: 3 seconds1000 items: 4 seconds10000 items: 5 secondsO(n)– Linear complexity. Time to complete that scales linearly with the size of the input set. In other words if you double the number of items in the input set, the algorithm takes roughly twice as long. An example is counting the number of items in a linked list.1 item: 1 second10 items: 10 seconds100 items: 100 secondsO(n log n)– Time to complete increases by the number of items times the result of Log2(N). An example of this is heap sort and quick sort.O(n2)– Quadratic complexity. Time to complete is roughly equal to the square of the number of items. An example of this is bubble sort.1 item: 1 second10 items: 100 seconds100 items: 10000 secondsO(n!)– Time to complete is the factorial of the input set. An example of this is the traveling salesman problem brute-force solution.