1. Overview

Without the necessary synchronization, the compiler, runtime, or processor may apply all sorts of optimizations. Although these optimizations are usually beneficial, they can sometimes cause subtle issues.

Caching and reordering are among those optimizations that may surprise us in concurrent contexts. Java and the JVM provide many ways to control memory order, and the volatile keyword is one of them.

In this article, we’ll focus on this foundational but often misunderstood concept in the Java language – the volatile keyword. First, we’ll start with a bit of background about how the underlying computer architecture works, and then we’ll get familiar with memory order in Java.

2. Shared Multiprocessor Architecture

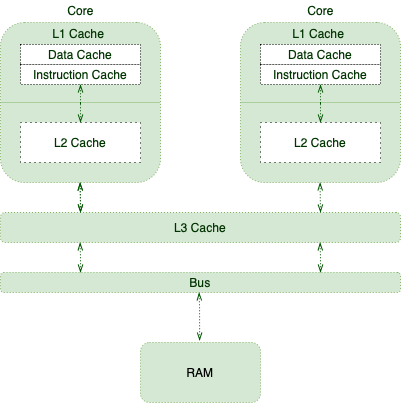

Processors are responsible for executing program instructions. Therefore, they need to retrieve both program instructions and required data from RAM.

Since CPUs can carry out a huge number of instructions per second, fetching from RAM on every access would slow them down considerably. To improve this situation, processors use techniques such as out-of-order execution, branch prediction, speculative execution, and, of course, caching.

This is where the memory hierarchy comes into play.

As different cores execute more instructions and manipulate more data, they fill up their caches with more relevant data and instructions. This improves overall performance but introduces cache coherence challenges.

Put simply, we should think twice about what happens when one thread updates a cached value.

3. When to Use volatile

To expand on cache coherence, let’s borrow an example from the book Java Concurrency in Practice:

    public class TaskRunner {

        private static int number;
        private static boolean ready;

        private static class Reader extends Thread {

            @Override
            public void run() {
                while (!ready) {
                    Thread.yield();
                }

                System.out.println(number);
            }
        }

        public static void main(String[] args) {
            new Reader().start();
            number = 42;
            ready = true;
        }
    }

The TaskRunner class maintains two simple variables. In its main method, it creates another thread that spins on the ready variable as long as it’s false. When the variable becomes true, the thread will simply print the number variable.

Many may expect this program to simply print 42 after a short delay. However, in reality, the delay may be much longer. It may even hang forever, or even print zero!

These anomalies have two causes: the lack of proper memory visibility, and reordering. Let’s evaluate them in more detail.

3.1. Memory Visibility

In this simple example, we have two application threads: the main thread and the reader thread. Let’s imagine a scenario in which the OS schedules those threads on two different CPU cores, where:

- The main thread has its copy of ready and number variables in its core cache

- The reader thread ends up with its copies, too

- The main thread updates the cached values

On most modern processors, write requests won’t be applied right away after they’re issued. In fact, processors tend to queue those writes in a special write buffer. After a while, they will apply those writes to main memory all at once.

With all that being said, when the main thread updates the number and ready variables, there is no guarantee about what the reader thread may see. In other words, the reader thread may see the updated value right away, or with some delay, or never at all!

This lack of memory visibility may cause liveness issues in programs that rely on one thread seeing another thread's updates.

3.2. Reordering

To make matters even worse, the reader thread may see those writes in an order other than the actual program order. For instance, since we first update the number variable:

    public static void main(String[] args) {
        new Reader().start();
        number = 42;
        ready = true;
    }

We may expect the reader thread to print 42. However, it’s actually possible to see zero as the printed value!

Reordering is an optimization technique for improving performance. Interestingly, different components may apply this optimization:

- The processor may flush its write buffer in an order other than the program order

- The processor may apply an out-of-order execution technique

- The JIT compiler may optimize via reordering

3.3. volatile Memory Order

To ensure that updates to variables propagate predictably to other threads, we should apply the volatile modifier to those variables:

    public class TaskRunner {

        private static volatile int number;
        private static volatile boolean ready;

        // same as before
    }

This way, we tell the runtime and the processor not to reorder any instruction involving the volatile variable. Processors also understand that they should flush any updates to these variables right away.
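
As a rough illustration, the complete example could look like the sketch below. The VolatileTaskRunner class name and the runOnce helper are assumptions made for this sketch and aren't part of the original listing. The reader is written as a lambda-based thread so that the value it observes can be returned to the caller:

```java
// Sketch only: VolatileTaskRunner and runOnce are hypothetical names for this example.
class VolatileTaskRunner {

    private static volatile int number;
    private static volatile boolean ready;

    // Starts a reader thread, publishes both values, and returns what the reader saw.
    static int runOnce() {
        number = 0;
        ready = false;
        int[] observed = new int[1];

        Thread reader = new Thread(() -> {
            while (!ready) {          // volatile read: observes the write below once it happens
                Thread.yield();
            }
            observed[0] = number;
        });
        reader.start();

        number = 42;
        ready = true;                 // volatile write

        try {
            reader.join();            // join() makes the reader's result visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for the reader", e);
        }
        return observed[0];
    }

    public static void main(String[] args) {
        System.out.println(runOnce());
    }
}
```

Because the write to ready comes after the write to number, and the reader only reads number after it sees ready as true, this version prints 42 and no longer hangs.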

4. volatile and Thread Synchronization

For multithreaded applications, we need to ensure two properties for consistent behavior:

- Mutual Exclusion – only one thread executes a critical section at a time

- Visibility – changes made by one thread to the shared data are visible to other threads to maintain data consistency

synchronized methods and blocks provide both of the above properties, at the cost of application performance.

volatile is a useful keyword because it ensures the visibility of data changes without providing mutual exclusion. It therefore fits the places where we’re OK with multiple threads executing a block of code in parallel, but we still need the visibility property.
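
To see what visibility without mutual exclusion means in practice, consider the following sketch. The CounterDemo class and its run method are hypothetical names introduced only for illustration. Several threads increment a volatile counter and a lock-protected counter the same number of times:

```java
// Sketch only: CounterDemo and run are hypothetical names for this example.
class CounterDemo {

    private static volatile int volatileCount; // visible to all threads, but ++ is not atomic
    private static int lockedCount;            // guarded by LOCK
    private static final Object LOCK = new Object();

    // Returns {volatileCount, lockedCount} after all worker threads have finished.
    static int[] run(int threads, int incrementsPerThread) {
        volatileCount = 0;
        lockedCount = 0;

        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++) {
                    volatileCount++;           // read, add, write: updates can be lost
                    synchronized (LOCK) {
                        lockedCount++;         // only one thread at a time runs this
                    }
                }
            });
            workers[i].start();
        }

        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for workers", e);
            }
        }
        return new int[] { volatileCount, lockedCount };
    }

    public static void main(String[] args) {
        int[] counts = run(4, 100_000);
        System.out.println("volatile: " + counts[0] + ", synchronized: " + counts[1]);
    }
}
```

The synchronized counter always reaches the total number of increments. The volatile counter can end up lower, since the ++ operation is a read-modify-write sequence and volatile doesn't make it atomic.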

5. Happens-Before Ordering

The memory visibility effects of volatile variables extend beyond the volatile variables themselves.

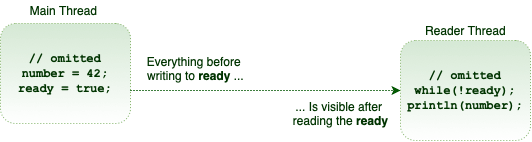

To make matters more concrete, let’s suppose thread A writes to a volatile variable, and then thread B reads the same volatile variable. In such cases, the values that were visible to A before writing the volatile variable will be visible to B after reading the volatile variable.

Technically speaking, any write to a volatile field happens-before every subsequent read of the same field. This is the volatile variable rule of the Java Memory Model (JMM).
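
A minimal sketch of this rule, assuming a hypothetical SettingsPublisher class whose names are invented for illustration: the writer fills in a plain object and then publishes it through a volatile reference, and the reader only touches the object after reading that reference:

```java
// Sketch only: SettingsPublisher, Settings, and publishAndRead are hypothetical names.
class SettingsPublisher {

    // Plain mutable holder: none of its fields are volatile or final.
    static final class Settings {
        String host;
        int port;
    }

    // Only the reference is volatile.
    private static volatile Settings current;

    // Publishes a Settings object from the calling thread and returns what a reader thread saw.
    static String publishAndRead() {
        current = null;
        String[] seen = new String[1];

        Thread reader = new Thread(() -> {
            Settings snapshot;
            while ((snapshot = current) == null) { // volatile read
                Thread.yield();
            }
            // Fields written before the volatile write are visible after the volatile read.
            seen[0] = snapshot.host + ":" + snapshot.port;
        });
        reader.start();

        Settings settings = new Settings();
        settings.host = "localhost";
        settings.port = 8080;
        current = settings;                        // volatile write publishes the fields above

        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for the reader", e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(publishAndRead());
    }
}
```

Note that the guarantee only covers writes made before the volatile write. If the writer kept mutating the Settings object after publishing it, the reader would have no visibility guarantee for those later changes.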

5.1. Piggybacking

Because of the strength of the happens-before memory ordering, sometimes we can piggyback on the visibility properties of another volatile variable. For instance, in our particular example, we just need to mark the ready variable as volatile:

    public class TaskRunner {

        private static int number; // not volatile
        private static volatile boolean ready;

        // same as before
    }

Anything prior to writing true to the ready variable is visible to anything after reading the ready variable. Therefore, the number variable piggybacks on the memory visibility enforced by the ready variable. Put simply, even though it’s not a volatile variable, it exhibits volatile behavior.

By making use of these semantics, we can declare only a few of the variables in our class as volatile and still get the visibility guarantee for the rest.
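
The ordering matters for piggybacking to work: the volatile write has to come last on the writer side, and the volatile read has to come first on the reader side. The sketch below shows one way this might look, using a hypothetical PiggybackPoint class that is published only once; all names here are assumptions made for illustration:

```java
// Sketch only: PiggybackPoint and its methods are hypothetical names for this example.
class PiggybackPoint {

    private int x;                       // plain field
    private int y;                       // plain field
    private volatile boolean published;  // the only volatile field

    // Intended to be called once: plain writes first, volatile write last.
    void publish(int newX, int newY) {
        x = newX;
        y = newY;
        published = true;
    }

    // Volatile read first; returns {x, y}, or null if nothing has been published yet.
    int[] read() {
        if (!published) {
            return null;
        }
        return new int[] { x, y };
    }

    // Publishes (3, 4) from the calling thread and returns what a reader thread saw.
    static int[] demo() {
        PiggybackPoint point = new PiggybackPoint();
        int[][] seen = new int[1][];

        Thread reader = new Thread(() -> {
            int[] values;
            while ((values = point.read()) == null) {
                Thread.yield();
            }
            seen[0] = values;
        });
        reader.start();

        point.publish(3, 4);

        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for the reader", e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        int[] values = demo();
        System.out.println(values[0] + ", " + values[1]);
    }
}
```

If publish set the flag before writing x and y, or if read inspected x and y before checking the flag, the plain fields would no longer be covered by the happens-before relationship.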

6. Conclusion

In this tutorial, we’ve explored more about the volatile keyword and its capabilities, as well as the improvements made to it starting with Java 5.

As always, the code examples can be found over on GitHub.